ArcGIS Survey123 is a mobile application for location-based, form-centric data collection and is part of the larger Esri ArcGIS platform. Researchers at the University of Michigan use Survey123 for activities such as recording artifacts at archeological sites, identifying pollinator activity in campus gardens, and tracking balloon debris around the Great Lakes. Many of these use cases either require in-depth knowledge of the survey subject or are citizen science projects that aim to collect large amounts of data from participants with little or no training.

Incorporating artificial intelligence (AI) or machine learning models into surveys presents an opportunity both to improve data quality by reducing manual input errors and to empower citizen science participants who may not be experts in the subject being surveyed.

Of course, this all depends on the accuracy of the model itself!

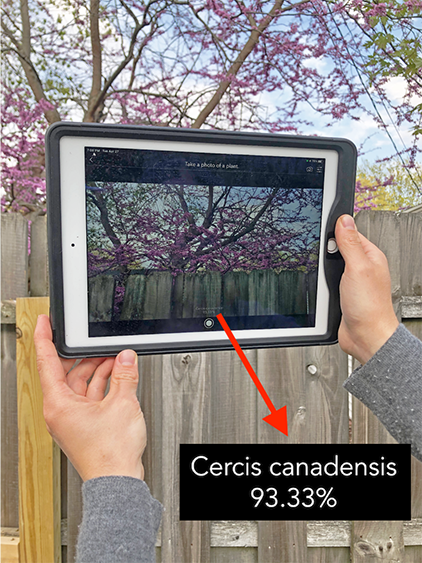

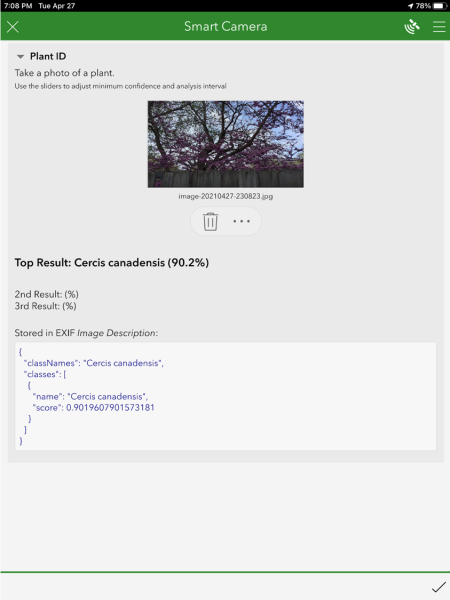

Last year Esri piloted a new Survey123 capability that runs AI models on mobile devices, letting users auto-populate survey answers by capturing an image. To test it, Esri used the ArcGIS deep learning Python libraries to train an image classification model on thousands of PlantCLEF images covering 100 North American plant species.

The model was exported in a mobile-specific format (TensorFlow Lite), which is easily packaged for use in Survey123. Image questions in the survey can call the model, which consumes live camera images and outputs the predicted plant species along with a confidence score. This output can then be used to populate survey answers or to conditionally show questions.
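The logic of turning a classifier's output into survey answers can be sketched in plain Python. This is an illustration of the idea, not how Survey123 itself wires the model to a form: it assumes the model returns one raw score per class (if your model already outputs probabilities, skip the softmax step), and the species labels, the `classify`/`survey_answers` helpers, and the 0.7 threshold are all hypothetical.

```python
import math

# Hypothetical class labels; a real model's label list comes from its training data.
LABELS = ["Asclepias syriaca", "Echinacea purpurea", "Rudbeckia hirta"]


def softmax(logits):
    """Convert raw model scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, labels=LABELS, threshold=0.7):
    """Return (species, confidence) for the top class.

    species is None when confidence is below the (hypothetical) threshold,
    meaning the answer should not be auto-populated.
    """
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    confidence = probs[best]
    species = labels[best] if confidence >= threshold else None
    return species, confidence


def survey_answers(logits):
    """Map one prediction onto hypothetical survey fields."""
    species, confidence = classify(logits)
    return {
        "species": species,  # auto-populated answer, or None
        "confidence": round(confidence, 3),
        # Condition for showing a manual-identification question.
        "show_manual_id_question": species is None,
    }
```

For example, `survey_answers([0.1, 3.0, 0.2])` fills in "Echinacea purpurea", while evenly split scores leave the answer blank and reveal the manual question. Gating on a threshold keeps low-confidence guesses out of the data instead of silently recording them.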

What was learned?

- The sample notebooks are accessible to beginners with no experience training machine learning models.

- Models can be created with just a few lines of code, and there are several parameters one can tune to improve training and accuracy.

- Your image classification model is only as good as your training data, and it takes time and compute resources to do the training. Be sure to incorporate sufficient example imagery to encompass the breadth and depth of your classes.

- Integration with Survey123 is simple and straightforward.
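Before training, a quick audit of how many example images each class has can reveal gaps in the training data. The sketch below assumes a hypothetical folder-per-class layout (one subfolder of images per species) and an arbitrary minimum of 50 images per class. Both are illustrative choices, not requirements of the ArcGIS libraries.

```python
from pathlib import Path

# File types counted as training images (an assumption; adjust to your data).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_images_per_class(root):
    """Count image files in each immediate subfolder of root (one folder per class)."""
    counts = {}
    for class_dir in sorted(Path(root).iterdir()):
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1
                for f in class_dir.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
            )
    return counts


def underrepresented(counts, min_images=50):
    """Return classes with fewer than min_images examples, fewest first."""
    return sorted((c for c, n in counts.items() if n < min_images), key=counts.get)
```

Classes flagged by `underrepresented` are good candidates for collecting more imagery before spending compute time on training.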

An example use case might be to leverage the plant identification model for a citizen science project. Users could be tasked with gathering information about plants that pollinators visit on campus, but they may not know enough to identify the plant species themselves. Instead, they could use their device’s camera and the model to identify the species and populate it in the survey form.

In addition to image classification models like the one described above, Survey123 can also leverage object detection models, which determine the location and count of objects within an image. For example, a user could quickly inventory the number of bikes parked at a bike rack, or the types of signs present along a stretch of road.
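Counting objects from a detection model's output amounts to filtering boxes by confidence and tallying labels. The sketch below assumes a hypothetical output format (a label, a score, and a pixel bounding box per detection) and a 0.5 score cutoff; real detection models and Survey123's integration may represent results differently.

```python
from collections import Counter
from typing import NamedTuple, Tuple


class Detection(NamedTuple):
    """One object found by a detection model (hypothetical output format)."""

    label: str
    score: float  # model confidence in [0, 1]
    box: Tuple[float, float, float, float]  # xmin, ymin, xmax, ymax in pixels


def count_objects(detections, min_score=0.5):
    """Count detections per label, ignoring low-confidence boxes."""
    return Counter(d.label for d in detections if d.score >= min_score)
```

With three "bicycle" boxes scored 0.92, 0.81, and 0.3, `count_objects` reports two bicycles. Raising `min_score` trades missed objects for fewer false positives, so the cutoff is worth tuning against a few hand-counted images.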

Do you have a use case in mind? Would you like to try it yourself? The sample notebook for training an image classification model and tutorials for getting started with Survey123 are freely accessible to members of the University of Michigan community.

If you have any questions, you can reach us at esrisupport@umich.edu.