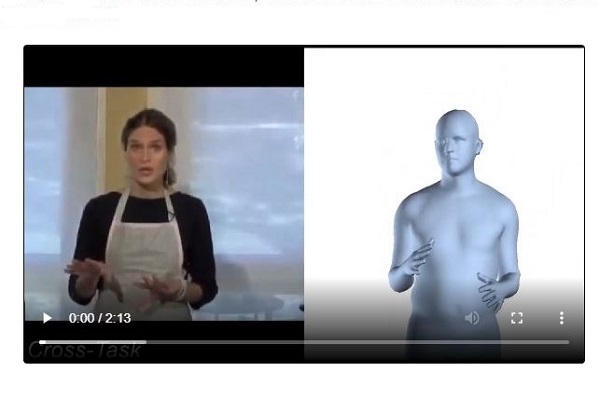

A field of study called human pose estimation focuses on teaching AI to identify how a person in an image or video is positioned. However, current models typically use videos in which the entire person is in view. Of the huge libraries of video content uploaded to public websites, only around 4% ever show an entire person.

New research at U-M makes it possible to train neural network models to identify a person’s position in videos where only part of their body is visible in the shot. This breakthrough opens up a huge library of video content to a new use: teaching machines the meaning behind people’s poses, as well as the different ways they interact with their environment.